Research Affiliations

University of Florida

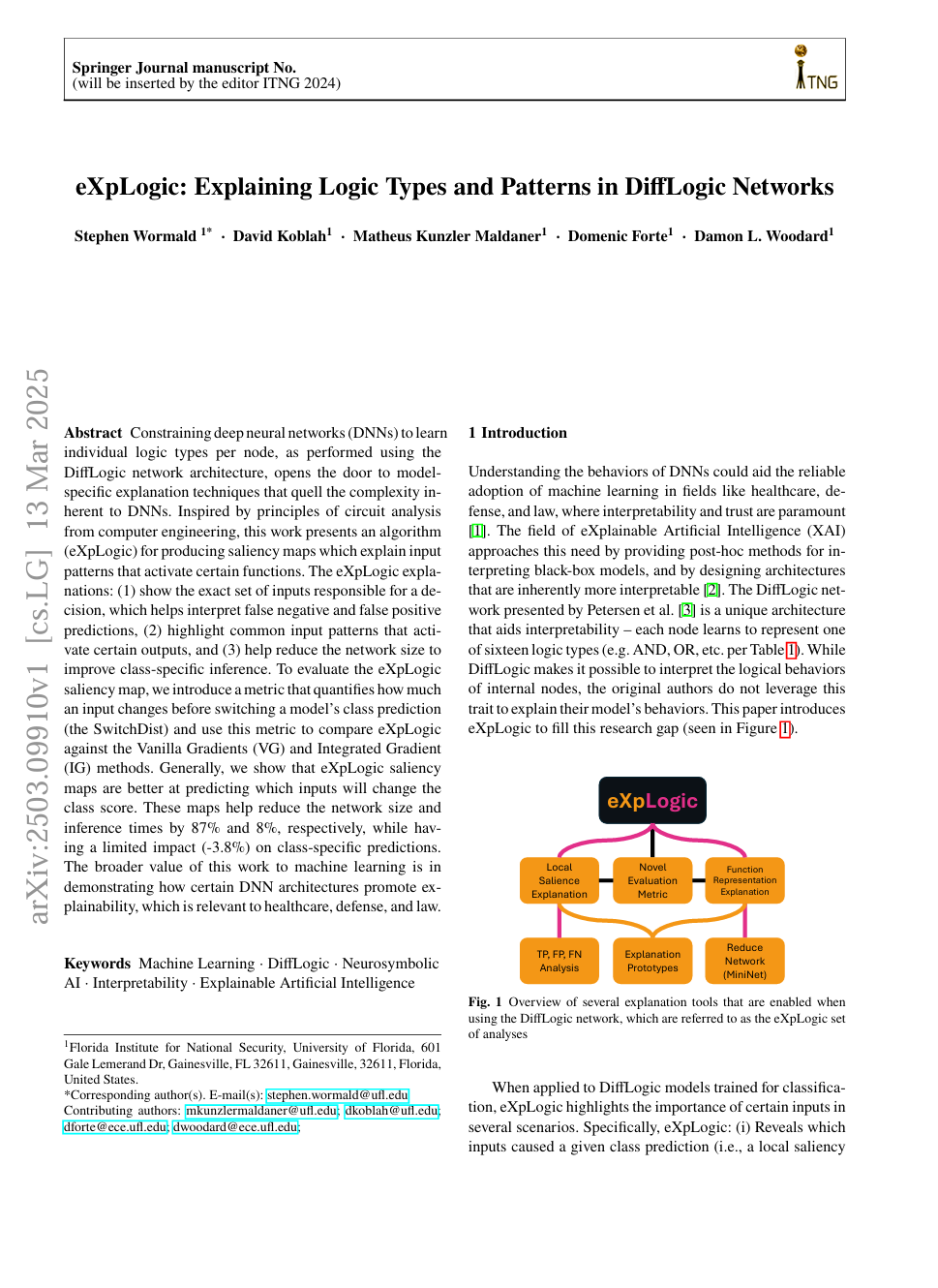

Research conducted at the University of Florida focuses on combining symbolic reasoning with neural networks to improve AI explainability.

Research Papers