MIRAGE: Multi-model Interface for Reviewing and Auditing Generative Text-to-Image AI

AAAI Conference on Human Computation and Crowdsourcing (HCOMP), Works-in-Progress, 2024

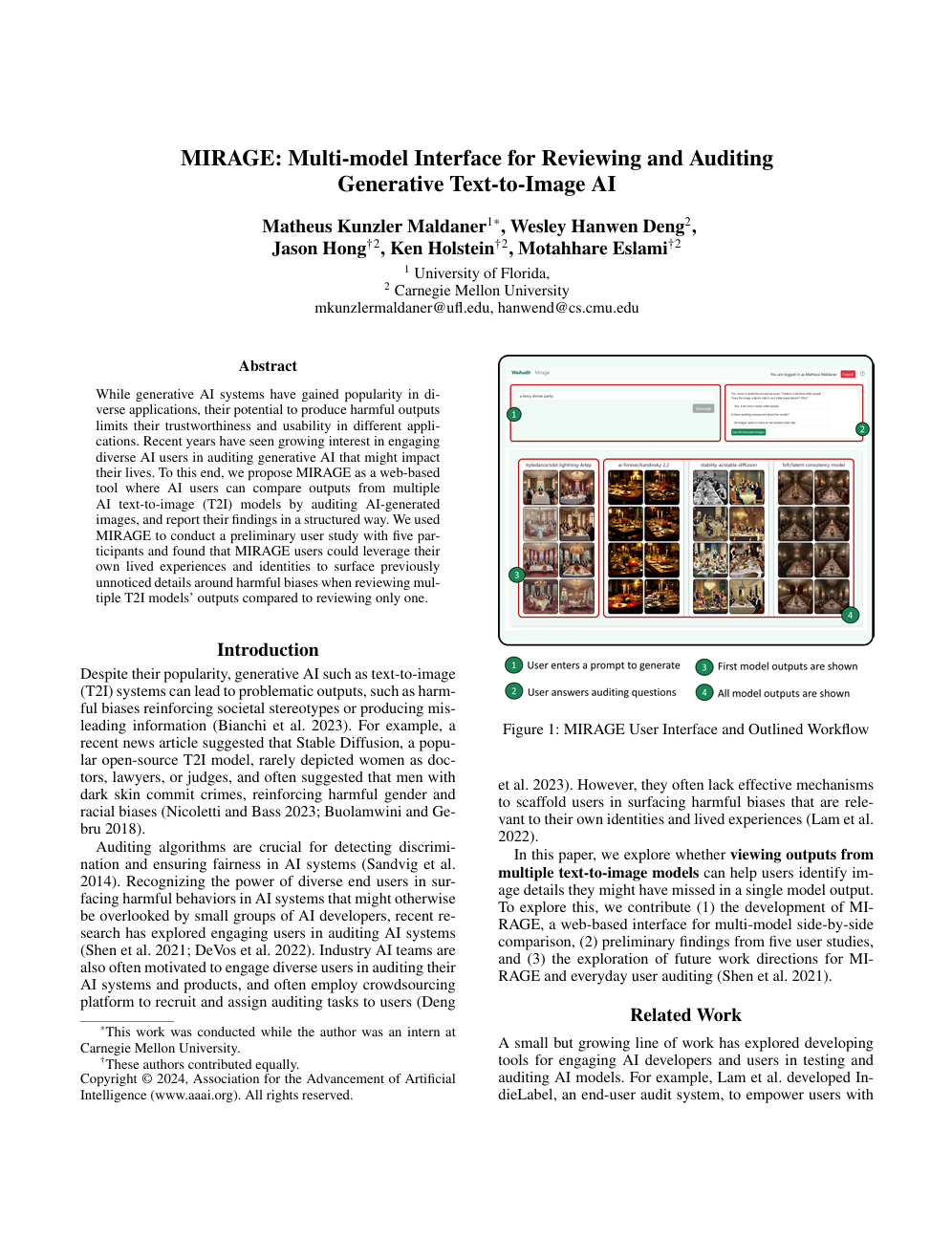

MIRAGE is a web-based auditing workflow that lets participants compare outputs from multiple text-to-image (T2I) models side by side on a single canvas. In a preliminary study, centering participants' lived experiences and supporting side-by-side comparison helped them uncover subtle biases in these generative models. The interface gives non-AI experts a structured way to document and submit model feedback, which can inform the development of safer and more inclusive generative AI systems.

@inproceedings{maldaner2024mirage,
  title={MIRAGE: Multi-model Interface for Reviewing and Auditing Generative Text-to-Image AI},
  author={Maldaner, Matheus Kunzler and Deng, Wesley Hanwen and Hong, Jason I. and Holstein, Ken and Eslami, Motahhare},
  booktitle={Proceedings of the AAAI Conference on Human Computation and Crowdsourcing (Works-in-Progress)},
  year={2024}
}